| Type: | Package |

| Title: | Causal Modeling with Coincidence Analysis |

| Version: | 4.0.3 |

| Date: | 2025-06-02 |

| Description: | Provides comprehensive functionalities for causal modeling with Coincidence Analysis (CNA), which is a configurational comparative method of causal data analysis that was first introduced in Baumgartner (2009) <doi:10.1177/0049124109339369>, and generalized in Baumgartner & Ambuehl (2020) <doi:10.1017/psrm.2018.45>. CNA is designed to recover INUS-causation from data, which is particularly relevant for analyzing processes featuring conjunctural causation (component causation) and equifinality (alternative causation). CNA is currently the only method for INUS-discovery that allows for multiple effects (outcomes/endogenous factors), meaning it can analyze common-cause and causal chain structures. Moreover, as of version 4.0, it is the only method of its kind that provides measures for model evaluation and selection that are custom-made for the problem of INUS-discovery. |

| License: | GPL-2 | GPL-3 [expanded from: GPL (≥ 2)] |

| URL: | https://CRAN.R-project.org/package=cna |

| Depends: | R (≥ 4.1.0) |

| Imports: | Rcpp, utils, stats, Matrix, matrixStats, car |

| LinkingTo: | Rcpp |

| Suggests: | dplyr, frscore, causalHyperGraph |

| NeedsCompilation: | yes |

| LazyData: | yes |

| Maintainer: | Mathias Ambuehl <mathias.ambuehl@consultag.ch> |

| Author: | Mathias Ambuehl [aut, cre, cph], Michael Baumgartner [aut, cph], Ruedi Epple [ctb], Veli-Pekka Parkkinen [ctb], Alrik Thiem [ctb] |

| Packaged: | 2025-06-02 05:28:52 UTC; MAM |

| Repository: | CRAN |

| Date/Publication: | 2025-06-02 06:30:02 UTC |

cna: A Package for Causal Modeling with Coincidence Analysis

Description

Coincidence Analysis (CNA) is a configurational comparative method of causal data analysis that was first introduced for crisp-set (i.e. binary) data in Baumgartner (2009a, 2009b, 2013) and generalized for multi-value and fuzzy-set data in Baumgartner and Ambuehl (2020). The cna package implements the method's latest stage of development.

CNA infers causal structures as defined by modern variants of the so-called INUS-theory (Mackie 1974; Grasshoff and May 2001; Baumgartner and Falk 2023a) from empirical data. The INUS-theory is a type-level difference-making theory that spells out causation in terms of redundancy-free Boolean dependency structures. It is optimally suited for the analysis of causal structures with the following features: conjunctivity—causes are arranged in complex bundles that only become operative when all of their components are properly co-instantiated, each of which in isolation is ineffective or leads to different outcomes—and disjunctivity—effects can be brought about along alternative causal routes such that, when suppressing one route, the effect may still be produced via another one.

Causal structures featuring conjunctivity and disjunctivity pose challenges for

methods of causal data analysis. As many theories of causation (other than the INUS-theory) entail that it is necessary (though not sufficient) for X to be a cause of Y that there be some kind of dependence (e.g. probabilistic or counterfactual) between X and Y, standard methods (e.g. Spirtes et al. 2000) infer that X is not a cause of Y if X and Y are not pairwise dependent.

However, there often are no dependencies between an individual component X of a conjunctive cause and the corresponding effect Y (for concrete examples see the package vignette (accessed from R by typing vignette("cna")). In the absence of pairwise dependencies, X can only be identified as a cause of Y if it is embedded in a complex Boolean structure over many factors and that structure is fitted to the data as a whole. But the space of Boolean functions over even a handful of factors is vast. So, a method for INUS-discovery must find ways to efficiently navigate in that vast space of possibilities. That is the purpose of CNA.

CNA is not the only method for the discovery of INUS structures. Other methods that can be used for that purpose are Logic Regression (Ruczinski et al. 2003, Kooperberg and Ruczinski 2005), which is implemented in the R package LogicReg, and Qualitative Comparative Analysis (Ragin 1987; 2008; Cronqvist and Berg-Schlosser 2009), whose most powerful implementations are provided by the R packages QCApro and QCA. But CNA is the only method for INUS-discovery that can process data generated by causal structures with more than one outcome and, hence, can analyze common-cause and causal chain structures as well as causal cycles and feedbacks. Moreover, as of version 4.0 of the cna package, it is the only method of its kind that offers measures for model evaluation and selection that are custom-made for the problem of INUS-discovery. Finally, unlike the models produced by Logic Regression or Qualitative Comparative Analysis, CNA's models are guaranteed to be redundancy-free, which makes them directly causally interpretable in terms of the INUS-theory; and CNA is more successful than any other method at exhaustively uncovering all INUS models that fit the data equally well. For comparisons of CNA with Qualitative Comparative Analysis and Logic Regression see (Baumgartner and Ambuehl 2020; Swiatczak 2022) and (Baumgartner and Falk 2023b), respectively.

There exist three additional R packages for data analysis with CNA: frscore, which automatizes robustness scoring of CNA models, causalHyperGraph, which visualizes CNA models as causal graphs, and cnaOpt, which systematizes the search for optimally fitting CNA models.

Details

| Package: | cna |

| Type: | Package |

| Version: | 4.0.3 |

| Date: | 2025-06-02 |

| License: | GPL (>= 2) |

Author(s)

Authors:

Mathias Ambuehl

mathias.ambuehl@consultag.ch

Michael Baumgartner

Department of Philosophy

University of Bergen

michael.baumgartner@uib.no

Maintainer:

Mathias Ambuehl

References

Baumgartner, Michael. 2009a. “Inferring Causal Complexity.” Sociological Methods & Research 38(1):71-101. doi:10.1177/0049124109339369

Baumgartner, Michael. 2009b. “Uncovering Deterministic Causal Structures: A Boolean Approach.” Synthese 170(1):71-96.

Baumgartner, Michael. 2013. “Detecting Causal Chains in Small-n Data.” Field Methods 25 (1):3-24. doi:10.1177/1525822X12462527

Baumgartner, Michael and Mathias Ambuehl. 2020. “Causal Modeling with Multi-Value and Fuzzy-Set Coincidence Analysis.” Political Science Research and Methods. 8:526-542. doi:10.1017/psrm.2018.45

Baumgartner, Michael and Christoph Falk. 2023a. “Boolean Difference-Making: A Modern Regularity Theory of Causation.” The British Journal for the Philosophy of Science, 74(1), 171-197. doi:10.1093/bjps/axz047

Baumgartner Michael and Christoph Falk. 2023b. “Configurational Causal Modeling and Logic Regression.” Multivariate Behavioral Research, 58:2, 292-310. doi:10.1080/00273171.2021.1971510

Cronqvist, Lasse, Dirk Berg-Schlosser. 2009. “Multi-Value QCA (mvQCA).” In B Rihoux, CC Ragin (eds.), Configurational Comparative Methods: Qualitative Comparative Analysis (QCA) and Related Techniques, pp. 69-86. Sage Publications, London.

Grasshoff, Gerd and Michael May. 2001. “Causal Regularities.” In W. Spohn, M. Ledwig, and M. Esfeld (Eds.). Current Issues in Causation, pp. 85-114. Paderborn: Mentis.

Mackie, John L. 1974. The Cement of the Universe: A Study of Causation. Oxford: Oxford University Press.

Kooperberg, Charles and Ingo Ruczinski. 2005. “Identifying Interacting SNPs Using Monte Carlo Logic Regression.” Genetic Epidemiology, 28(2):157-170.doi:10.1002/gepi.20042

Ragin, Charles C. 1987. The Comparative Method. Berkeley: University of California Press.

Ragin, Charles C. 2008. Redesigning Social Inquiry: Fuzzy Sets and Beyond. Chicago: University of Chicago Press.

Ruczinski, Ingo, Charles Kooperberg, and Michael LeBlanc. 2003. “Logic Regression.” Journal of Computational and Graphical Statistics 12:475-511. doi:10.1198/1061860032238

Spirtes, Peter, Clark Glymour, and Richard Scheines. 2000. “Causation, Prediction, and Search.” 2 edition. MIT Press, Cambridge.

Swiatczak, Martyna. 2022. “Different Algorithms, Different Models.” Quality & Quantity 56:1913-1937.

Perform Coincidence Analysis

Description

The cna function performs Coincidence Analysis to identify atomic solution formulas (asf) consisting of minimally necessary disjunctions of minimally sufficient conditions of all outcomes in the data

and combines the recovered asf to complex solution formulas (csf) representing multi-outcome structures, e.g. common-cause and/or causal chain structures.

Usage

cna(x, outcome = TRUE, con = 1, cov = 1, maxstep = c(3, 4, 10),

measures = c("standard consistency", "standard coverage"),

ordering = NULL, strict = FALSE, exclude = character(0), notcols = NULL,

what = if (suff.only) "m" else "ac", details = FALSE,

suff.only = FALSE, acyclic.only = FALSE, cycle.type = c("factor", "value"),

verbose = FALSE, control = NULL, ...)

Arguments

x |

Data frame or |

outcome |

Character vector specifying one or several factor values that are to be considered as potential outcome(s). For crisp- and fuzzy-set data, factor values are expressed by upper and lower cases, for multi-value data, they are expressed by the "factor=value" notation.

Defaults to |

con |

Numeric scalar between 0 and 1 to set the threshold for the sufficiency measure selected in |

cov |

Numeric scalar between 0 and 1 to set the threshold for the necessity measure selected in |

maxstep |

Vector of three integers; the first specifies the maximum number of conjuncts in each disjunct of an asf, the second specifies the maximum number of disjuncts in an asf, the third specifies the maximum complexity of an asf. The complexity of an asf is

the total number of exogenous factor value appearances in the asf. Default: |

measures |

Character vector of length 2. |

ordering |

Character string or list of character vectors specifying the causal ordering of

the factors in |

strict |

Logical; if |

exclude |

Character vector specifying factor values to be excluded as possible causes of certain outcomes. For instance, |

notcols |

Character vector of factors to be negated in |

what |

Character string specifying what to print; |

details |

A character vector specifying the evaluation measures and additional solution attributes to be computed. Possible elements are all the measures in |

suff.only |

Logical; if |

acyclic.only |

Logical; if |

cycle.type |

Character string specifying what type of cycles to be detected: |

verbose |

Logical; if |

control |

Argument for fine-tuning and modifying the CNA algorithm (in ways that are not relevant for the ordinary user). See |

... |

Arguments for fine-tuning; passed to |

Details

The first input x of the cna function is a data frame or a configuration table. The data can be crisp-set (cs), fuzzy-set (fs), or multi-value (mv). Factors in cs data can only take values from {0,1}, factors in fs data can take on any (continuous) values from the unit interval [0,1], while factors in mv data can take on any of an open (but finite) number of non-negative integers as values. To ensure that no misinterpretations of returned asf and csf can occur, users are advised to exclusively use upper case letters as factor (column) names. Column names may contain numbers, but the first sign in a column name must be a letter. Only ASCII signs should be used for column and row names.

A data frame or configuration table x is the sole mandatory input of the cna function. In particular, cna does not need an input specifying which factor(s) in x are endogenous, it tries to infer that from the data. But if it is known prior to the analysis what factors have values that can figure as outcomes, an outcome specification can be passed to cna via the argument outcome, which takes as input a character vector identifying one or several factor values as potential outcome(s). For cs and fs data, outcomes are expressed by upper and lower cases (e.g. outcome = c("A", "b")). If factor names have multiple letters, any upper case letter is interpreted as 1, and the absence of upper case letters as 0 (i.e. outcome = c("coLd", "shiver") is interpreted as COLD=1 and SHIVER=0). For mv data, factor values are assigned by the “factor=value” notation (e.g. outcome = c("A=1","B=3")). Defaults to outcome = TRUE, which means that all factor values in x are potential outcomes.

When the data x contain multiple potential outcomes, it may moreover be known, prior to the analysis, that these outcomes have a certain causal ordering, meaning that some of them are causally upstream of the others. Such information can be passed to cna by means of the argument ordering, which takes either a character string or a list of character vectors as value. For example, ordering = "A, B < C" or, equivalently, ordering = list(c("A", "B"), "C") determines that factor C is causally located downstream of factors A and B, meaning that no values of C are potential causes of values of A and B. In consequence, cna only checks whether values of A and B can be modeled as causes of values of C; the test for a causal dependency in the other direction is skipped.

An ordering does not need to explicitly mention all factors in x. If only a subset of the factors are included in the ordering, the non-included factors are entailed to be upstream of the included ones. Hence, ordering = "C" means that C is located downstream of all other factors in x.

The argument strict determines whether the elements of one level in an ordering can be causally related or not. For example, if ordering = "A, B < C" and strict = TRUE, then the values of A and B—which are on the same level of the ordering—are excluded to be causally related and cna skips corresponding tests. By contrast, if ordering = "A, B < C" and strict = FALSE, then cna also searches for dependencies among the values of A and B. The default is strict = FALSE.

An ordering excludes all values of a factor as potential causes of an outcome. But a user may only be in a position to exclude some (not all) values as potential causes. Such information can be passed to cna through the argument exclude, which can be assigned a vector of character strings featuring the factor values to be excluded as causes to the left of the "->" sign and the corresponding outcomes on the right. For example, exclude = "A=1,C=3 -> B=1" determines that the value 1 of factor A and the value 3 of factor C are excluded as causes of the value 1 of factor B. Factor values can be excluded as potential causes of multiple outcomes as follows: exclude = c("A,c -> B", "b,H -> D"). For cs and fs data, upper case letters are interpreted as 1, lower case letters as 0. If factor names have multiple letters, any upper case letter is interpreted as 1, and the absence of upper case letters as 0. For mv data, the "factor=value" notation is required.

To exclude all values of a factor as potential causes of an outcome or to exclude a factor value as potential cause of all values of some endogenous factor, a "*" can be appended to the corresponding factor name; for example: exclude = "A* -> B" or exclude = "A=1,C=3 -> B*".

The exclude argument can be used both independently of and in conjunction with outcome and ordering, but if assignments to outcome and ordering contradict assignments to exclude, the latter are ignored. If exclude is assigned values of factors that do not appear in the data x, an error is returned.

If no outcomes are specified and no causal ordering is provided, all factor values in x are treated as potential outcomes; more specifically, in case of cs and fs data, cna tests for all factors whether their presence (i.e. them taking the value 1) can be modeled as an outcome, and in case of mv data, cna tests for all factors whether any of their possible values can be modeled as an outcome. That is done by searching for redundancy-free Boolean functions (in disjunctive normal form) that account for the behavior of an outcome in accordance with exclude.

The core Boolean dependence relations exploited for that purpose are sufficiency and necessity. To assess whether the (typically noisy) data warrant inferences to sufficiency and necessity, cna draws on evaluation measures for sufficiency and necessity, which can be selected via the argument measures, expecting a character vector of length 2. The first element, measures[1], specifies the measure to be used for sufficiency evaluation, and measures[2] specifies the measure to be used for necessity evaluation. All eight available evaluation measures can be printed to the console through showConCovMeasures. Four of them are sufficiency measures—variants of consistency (Ragin 2006)—, and four are necessity measures—variants of coverage (Ragin 2006). They implement different approaches for assessing whether the evidence in the data justifies an inference to sufficiency or necessity, respectively (cf. De Souter 2024; De Souter & Baumgartner 2025). The default is measures = c("standard consistency", "standard coverage"). More details are provided in section 3.2 of the cna package vignette (call vignette("cna")).

Against that background, cna first identifies, for each potential outcome in x, all minimally sufficient conditions (msc) that meet the threshold given to the selected sufficiency measure in the argument con. Then, these msc are disjunctively combined to minimally necessary conditions that meet the threshold for the selected necessity measure given to the argument cov, such that the whole disjunction meets con. The default value for con and cov is 1. The expressions resulting from this procedure are the atomic solution formulas (asf) for every factor value that can be modeled as an outcome. Asf represent causal structures with one outcome. To model structures with more than one outcome, the recovered asf are conjunctively combined to complex solution formulas (csf). To build its models, cna uses a bottom-up search algorithm, which we do not reiterate here (see Baumgartner and Ambuehl 2020 or the section 4 of vignette("cna")).

As the combinatorial search space of this algorithm is often too large to be exhaustively scanned in reasonable time, the argument maxstep allows for setting an upper bound for the complexity of the generated asf. maxstep takes a vector of three integers c(i, j, k) as input, entailing that the generated asf have maximally j disjuncts with maximally i conjuncts each and a total of maximally k factor value appearances (k is the maximal complexity). The default is maxstep = c(3, 4, 10).

Note that when the data x feature noise, the default con and cov thresholds of 1 will often not yield any asf. In such cases, con and cov may be set to values below 1. con and cov should neither be set too high, in order to avoid overfitting, nor too low, in order to avoid underfitting. The overfitting danger is severe in causal modeling with CNA (and configurational causal modeling more generally). For a discussion of this problem see Parkkinen and Baumgartner (2023), who also introduce a procedure for robustness assessment that explores all threshold settings in a given interval—in an attempt to reduce both over- and underfitting. See also the R package frscore.

If verbose is set to its non-default value TRUE, some information about the progression of the algorithm is returned to the console during the execution of the cna function. The execution can easily be interrupted by ESC at all stages.

The default output of cna first lists the provided ordering (if any), second, the pre-identified outcomes (if any), third, the implemented sufficiency and necessity measures, fourth, the recovered asf, and fifth, the csf. Asf and csf are ordered by complexity and the product of their con and cov scores.

For asf and csf, three attributes are standardly computed: con, cov, and complexity. The first two correspond to a solution's scores on the selected sufficiency and necessity measures, and the complexity score amounts to the number of factor value appearances on the left-hand sides of “->” or “<->” in asf and csf.

Apart from the evaluation measures used for model building through the measures argument, cna can also return the solution scores on all other available evaluation measures. This is accomplished by giving the details argument a character vector containing the names or aliases of the evaluation measures to be computed. For example, if details = c("ccon", "ccov", "PAcon", "AAcov"), the output of cna contains additional columns presenting the scores of the solutions on the requested measures.

In addition to measures evaluating the evidence for sufficiency and necessity, cna can calculate a number of further solution attributes: exhaustiveness, faithfulness, coherence, and cyclic all of which are recovered by requesting them through the details argument. Explanations of these attributes can be found in sections 5.2 to 5.4 of vignette("cna").

The argument notcols is used to calculate asf and csf

for negative outcomes in data of type cs and fs (in mv data notcols has no meaningful interpretation and, correspondingly, issues an error message). If notcols = "all", all factors in x are negated,

i.e. their membership scores i are replaced by 1-i. If notcols is given a character vector

of factors in x, only the factors in that vector are negated. For example, notcols = c("A", "B")

determines that only factors A and B are negated. The default is no negations, i.e. notcols = NULL.

suff.only is applicable whenever a complete cna analysis cannot be performed for reasons of computational complexity. In such a case, suff.only = TRUE forces cna to stop the analysis after the identification of msc, which will normally yield results even in cases when a complete analysis does not terminate. In that manner, it is possible to shed at least some light on the dependencies among the factors in x, in spite of an incomputable solution space.

The argument control provides a number of options to fine-tune and modify the CNA algorithm and the output of cna. It expects a list generated by the function cnaControl as input, for example, control = cnaControl(inus.only = FALSE, inus.def = c("equivalence"), con.msc = 0.8). The available fine-tuning parameters are documented here: cnaControl. All of the arguments in cnaControl can also be passed to the cna function directly via .... They all have default values yielding the standard behavior of cna, which do not have to be changed by the ordinary CNA user.

The argument what regulates what items of the output of cna are printed. It has no effect on the computations that are performed when executing cna; it only determines how the result is printed. See print.cna for more information on what.

Value

cna returns an object of class “cna”, which amounts to a list with the following elements:

call: | the executed function call |

x: | the processed data frame or configuration table, as input to cna |

ordering | the ordering imposed on the factors in the configuration table (if not NULL) |

configTable: | a “configTable” containing the the input data |

solution: | the solution object, which itself is composed of lists exhibiting msc and asf for all |

| outcome factors | |

measures: | the evaluation con- and cov-measures used for model-building |

what: | the values given to the what argument |

...: | plus additional list elements conveying more details on the function call and the |

| performed coincidence analysis. |

Note

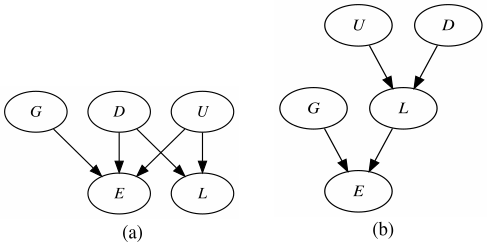

In the first example described below (in Examples), the two resulting complex solution formulas represent a common cause structure and a causal chain, respectively. The common cause structure is graphically depicted in figure (a) below, the causal chain in figure (b).

References

Aleman, Jose. 2009. “The Politics of Tripartite Cooperation in New Democracies: A Multi-level Analysis.” International Political Science Review 30 (2):141-162.

Basurto, Xavier. 2013. “Linking Multi-Level Governance to Local Common-Pool Resource Theory using Fuzzy-Set Qualitative Comparative Analysis: Insights from Twenty Years of Biodiversity Conservation in Costa Rica.” Global Environmental Change 23(3):573-87.

Baumgartner, Michael. 2009. “Inferring Causal Complexity.” Sociological Methods & Research 38(1):71-101.

Baumgartner, Michael and Mathias Ambuehl. 2020. “Causal Modeling with Multi-Value and Fuzzy-Set Coincidence Analysis.” Political Science Research and Methods. 8:526–542.

Baumgartner, Michael and Christoph Falk. 2023. “Boolean Difference-Making: A Modern Regularity Theory of Causation.” The British Journal for the Philosophy of Science, 74(1), 171-197. doi:10.1093/bjps/axz047.

De Souter, Luna. 2024. “Evaluating Boolean Relationships in Configurational ComparativeMethods.” Journal of Causal Inference 12(1). doi:10.1515/jci-2023-0014.

De Souter, Luna and Michael Baumgartner. 2025. “New sufficiency and necessity measures for model building with Coincidence Analysis.” Zenodo. https://doi.org/10.5281/zenodo.13619580

Hartmann, Christof, and Joerg Kemmerzell. 2010. “Understanding Variations in Party Bans in Africa.” Democratization 17(4):642-65.

Krook, Mona Lena. 2010. “Women's Representation in Parliament: A Qualitative Comparative Analysis.” Political Studies 58(5):886-908.

Mackie, John L. 1974. The Cement of the Universe: A Study of Causation. Oxford: Oxford University Press.

Parkkinen, Veli-Pekka and Michael Baumgartner. 2023. “Robustness and Model Selection in Configurational Causal Modeling.” Sociological Methods & Research, 52(1), 176-208.

Ragin, Charles C. 2006. “Set Relations in Social Research: Evaluating Their Consistency and Coverage.” Political Analysis 14(3):291-310.

Wollebaek, Dag. 2010. “Volatility and Growth in Populations of Rural Associations.” Rural Sociology 75:144-166.

See Also

print.cna, configTable, condition, cyclic, condTbl, selectCases, makeFuzzy, some,

randomConds, is.submodel, is.inus, showMeasures, redundant, full.ct, d.educate,

d.women,

d.pban,d.autonomy, d.highdim

Examples

# Ideal crisp-set data from Baumgartner (2009) on education levels in western democracies

# ----------------------------------------------------------------------------------------

# Exhaustive CNA without constraints on the search space; print atomic and complex

# solution formulas (default output).

cna.educate <- cna(d.educate)

cna.educate

# The two resulting complex solution formulas represent a common cause structure

# and a causal chain, respectively. The common cause structure is graphically depicted

# in (Note, figure (a)), the causal chain in (Note, figure (b)).

# Build solutions with other than standard evaluation measures.

cna(d.educate, measures = c("ccon", "ccov"))

cna(d.educate, measures = c("PAcon", "PACcov"))

# CNA with negations of the factors E and L.

cna(d.educate, notcols = c("E","L"))

# The same by use of the outcome argument.

cna(d.educate, outcome = c("e","l"))

# CNA with negations of all factors.

cna(d.educate, notcols = "all")

# Print msc, asf, and csf with additional evaluation measures and solution attributes.

cna(d.educate, what = "mac", details = c("ccon","ccov","PAcon","PACcov","exhaustive"))

cna(d.educate, what = "mac", details = c("e","f","AACcon","AAcov"))

cna(d.educate, what = "mac", details = TRUE)

# Print solutions without spaces before and after "+".

options(spaces = c("<->", "->" ))

cna(d.educate, details = c("e", "f"))

# Print solutions with spaces before and after "*".

options(spaces = c("<->", "->", "*" ))

cna(d.educate, details = c("e", "f", "PAcon", "PACcov"))

# Restore the default of the option "spaces".

options(spaces = c("<->", "->", "+"))

# Crisp-set data from Krook (2010) on representation of women in western-democratic

# parliaments

# -----------------------------------------------------------------------------------

# This example shows that CNA can distinguish exogenous and endogenous factors in the

# data. Without being told which factor is the outcome, CNA reproduces the original

# QCA of Krook (2010).

ana1 <- cna(d.women, measures = c("PAcon", "PACcov"), details = c("e", "f"))

ana1

# The two resulting asf only reach an exhaustiveness score of 0.438, meaning that

# not all configurations that are compatible with the asf are contained in the data

# "d.women". Here is how to extract the configurations that are compatible with

# the first asf but are not contained in "d.women".

library(dplyr)

setdiff(ct2df(selectCases(asf(ana1)$condition[1], full.ct(d.women))),

d.women)

# Highly ambiguous crisp-set data from Wollebaek (2010) on very high volatility of

# grassroots associations in Norway

# --------------------------------------------------------------------------------

# csCNA with ordering from Wollebaek (2010) [Beware: due to massive ambiguities,

# this analysis will take about 20 seconds to compute.]

cna(d.volatile, ordering = "VO2", maxstep = c(6, 6, 16))

# Using suff.only, CNA can be forced to abandon the analysis after minimization of

# sufficient conditions. [This analysis terminates quickly.]

cna(d.volatile, ordering = "VO2", maxstep = c(6, 6, 16), suff.only = TRUE)

# Similarly, by using the default maxstep, CNA can be forced to only search for asf

# and csf with reduced complexity.

cna(d.volatile, ordering = "VO2")

# ordering = "VO2" only excludes that the values of VO2 are causes of the values

# of the other factors in d.volatile, but cna() still tries to model other factor

# values as outcomes. The following call determines that only VO2 is a possible

# outcome. (This call terminates quickly.)

cna(d.volatile, outcome = "VO2")

# We can even increase maxstep.

cna(d.volatile, outcome = "VO2", maxstep=c(4,4,16))

# If it is known that, say, el and od cannot be causes of VO2, we can exclude this.

cna(d.volatile, outcome = "VO2", maxstep=c(4,4,16), exclude = "el, od -> VO2")

# The verbose argument returns information during the execution of cna().

cna(d.volatile, ordering = "VO2", verbose = TRUE)

# Multi-value data from Hartmann & Kemmerzell (2010) on party bans in Africa

# ---------------------------------------------------------------------------

# mvCNA with an outcome specification taken from Hartmann & Kemmerzell

# (2010); standard coverage threshold at 0.95 (standard consistency threshold at 1),

# maxstep at c(6, 6, 10).

cna.pban <- cna(d.pban, outcome = "PB=1", cov = .95, maxstep = c(6, 6, 10),

what = "all")

cna.pban

# The previous function call yields a total of 14 asf and csf, only 5 of which are

# printed in the default output. Here is how to extract all 14 asf and csf.

asf(cna.pban)

csf(cna.pban)

# [Note that all of these 14 causal models reach better consistency and

# coverage scores than the one model Hartmann & Kemmerzell (2010) present in their

# paper, which they generated using the TOSMANA software, version 1.3.

# T=0 + T=1 + C=2 + T=1*V=0 + T=2*V=0 <-> PB=1]

condTbl("T=0 + T=1 + C=2 + T=1*V=0 + T=2*V=0 <-> PB = 1", d.pban)

# Extract all minimally sufficient conditions with further details.

msc(cna.pban, details = c("ccon", "ccov", "PAcon", "PACcov"))

# Alternatively, all msc, asf, and csf can be recovered by means of the nsolutions

# argument of the print function, which also allows for adding details.

print(cna.pban, nsolutions = "all", details = c("AACcon", "AAcov", "ex", "fa"))

# Print the configuration table with the "cases" column.

print(cna.pban, what = "t", show.cases = TRUE)

# Build solution formulas with maximally 4 disjuncts.

cna(d.pban, outcome = "PB=1", cov = .95, maxstep = c(4, 4, 10))

# Use non-standard evaluation measures for solution building.

cna(d.pban, outcome = "PB=1", cov = .95, measures = c("PAcon", "PACcov"))

# Only print 2 digits of standard consistency and coverage scores.

print(cna.pban, digits = 2)

# Build all but print only two msc for each factor and two asf and csf.

print(cna(d.pban, outcome = "PB=1", cov = .95,

maxstep = c(6, 6, 10), what = "all"), nsolutions = 2)

# Lowering the thresholds on standard consistency and coverage yields further

# models with excellent fit scores; print only asf.

cna(d.pban, outcome = "PB=1", con = .93, what = "a", maxstep = c(6, 6, 10))

# Lowering both standard consistency and coverage.

cna(d.pban, outcome = "PB=1", con = .9, cov =.9, maxstep = c(6, 6, 10))

# Lowering both standard consistency and coverage and excluding F=0 as potential

# cause of PB=1.

cna(d.pban, outcome = "PB=1", con = .9, cov =.9, maxstep = c(6, 6, 10),

exclude = "F=0 -> PB=1")

# Specifying an outcome is unnecessary for d.pban. PB=1 is the only

# factor value in those data that could possibly be an outcome.

cna(d.pban, con=.9, cov = .9, maxstep = c(6, 6, 10))

# Fuzzy-set data from Basurto (2013) on autonomy of biodiversity institutions in Costa Rica

# ---------------------------------------------------------------------------------------

# Basurto investigates two outcomes: emergence of local autonomy and endurance thereof. The

# data for the first outcome are contained in rows 1-14 of d.autonomy, the data for the second

# outcome in rows 15-30. For each outcome, the author distinguishes between local ("EM",

# "SP", "CO"), national ("CI", "PO") and international ("RE", "CN", "DE") conditions. Here,

# we first apply fsCNA to replicate the analysis for the local conditions of the endurance of

# local autonomy.

dat1 <- d.autonomy[15:30, c("AU","EM","SP","CO")]

cna(dat1, ordering = "AU", strict = TRUE, con = .9, cov = .9)

# The CNA model has significantly better consistency (and equal coverage) scores than the

# model presented by Basurto (p. 580): SP*EM + CO <-> AU, which he generated using the

# fs/QCA software.

condition("SP*EM + CO <-> AU", dat1) # both EM and CO are redundant to account for AU

# If we allow for dependencies among the conditions by setting strict = FALSE, CNA reveals

# that SP is a common cause of both AU and EM.

cna(dat1, ordering = "AU", strict = FALSE, con = .9, cov = .9)

# Here are two analyses at different con/cov thresholds for the international conditions

# of autonomy endurance.

dat2 <- d.autonomy[15:30, c("AU","RE", "CN", "DE")]

cna(dat2, ordering = "AU", con = .9, cov = .85)

cna(dat2, ordering = "AU", con = .85, cov = .9, details = TRUE)

# Here are two analyses of the whole dataset using different evaluation measures.

# They show that across the whole period 1986-2006, the best causal model of local

# autonomy (AU) renders that outcome dependent only on local direct spending (SP).

cna(d.autonomy, outcome = "AU", con = .85, cov = .9,

maxstep = c(5, 5, 11), details = TRUE)

cna(d.autonomy, outcome = "AU", measures = c("AACcon","AAcov"), con = .85, cov = .9,

maxstep = c(5, 5, 11), details = TRUE)

# High-dimensional data

# ---------------------

# Here's an analysis of the data d.highdim with 50 factors, massive

# fragmentation, and 20% noise. (Takes about 15 seconds to compute.)

head(d.highdim)

cna(d.highdim, outcome = c("V13", "V11"), con = .8, cov = .8)

# By lowering maxstep, computation time can be reduced to less than 1 second

# (at the cost of an incomplete solution).

cna(d.highdim, outcome = c("V13", "V11"), con = .8, cov = .8,

maxstep = c(2,3,10))

# Highly ambiguous artificial data to illustrate exhaustiveness and acyclic.only

# ------------------------------------------------------------------------------

mycond <- "(D + C*f <-> A)*(C*d + c*D <-> B)*(B*d + D*f <-> C)*(c*B + B*f <-> E)"

dat1 <- selectCases(mycond)

ana1 <- cna(dat1, details = c("e","cy"))

# There exist almost 2M csf. This is how to build the first 927 of them, with

# additional messages about the csf building process.

first.csf <- csf(ana1, verbose = TRUE)

first.csf

# Most of these csf are compatible with more configurations than are contained in

# dat1. Only 141 csf in first.csf are perfectly exhaustive (i.e. all compatible

# configurations are contained in dat1).

subset(first.csf, exhaustiveness == 1)

# All of the csf in first.csf contain cyclic substructures.

subset(first.csf, cyclic == TRUE)

# Here's how to build acyclic csf.

ana2 <- cna(dat1, details = c("e","cy"), acyclic.only = TRUE)

csf(ana2, verbose = TRUE)

Deprecated functions in the cna package

Description

These functions are provided for compatibility with older versions of the cna package only, and may be removed eventually. They have become obsolete since the introduction of the default setting type = "auto" in package version 3.2.0.

Usage

csct(...)

mvct(...)

fsct(...)

cscna(...)

mvcna(...)

fscna(...)

cscond(...)

mvcond(...)

fscond(...)

Arguments

... |

In |

Details

csct(x, ...), mvct(x, ...), and fsct(x, ...) are shorthands for configTable(x, type = "cs", ...), configTable(x, type = "mv", ...) and configTable(x, type = "fs", ...), respectively.

cscna(x, ...), mvcna(x, ...), and fscna(x, ...) are shorthands for cna(x, type = "cs", ...), cna(x, type = "mv", ...) and cna(x, type = "fs", ...), respectively.

cscond(x, ct, ...), mvcond(x, ct, ...), and fscond(x, ct, ...) are shorthands for condition(x, ct, type = "cs", ...), condition(x, ct, type = "mv", ...) and condition(x, ct, type = "fs", ...), respectively.

See Also

Internal functions in the cna package

Description

These functions are mainly for internal purposes and will not normally be called directly by the user.

Overview of Functions

noblanks,lhs,rhs,extract_asf:-

Manipulation of character vectors containing conditions and atomic solution formulas.

ctInfo:-

Alternative internal represenation of a

configTable. qcond_bool,qcond_asf,qcond_csf:-

Fast evaluation of the well-formedness of conditions, asf, and csf.

getCondType,getComplexity:-

Extract condition type and complexity values from a character vector containing conditions.

stdCond,matchCond:-

Standardize and match conditions.

relist1,hstrsplit,C_mconcat,C_mconcat,C_recCharList2char,C_relist_Int:-

Utility functions for conversion between different represenations of conditions.

C_redund:-

Internal version of the test for structural redundancy (used in

redundant). getCond:-

Derive a condition from a data set.

rreduce:-

Eliminate redundancies from a disjunctive normal form.

redundant,minimalizeCsf:-

Identify structurally redundant asf in a csf and eliminate them.

C_is_submodel:-

Internal core of the function

is.submodel. .det,.inus,.exff,.redund,.inCsf:-

These are generic auxiliary functions used internally within other functions.

Extract solutions from an object of class “cna”

Description

Given a solution object x produced by cna, msc(x) extracts all minimally sufficient conditions, asf(x) all atomic solution formulas, and csf(x, n.init) builds approximately n.init complex solution formulas. All solution attributes (details) available in showMeasures can be computed. The three functions return a data frame with the additional class attribute “condTbl”.

Usage

msc(x, details = x$details, cases = FALSE)

asf(x, details = x$details)

csf(x, n.init = 1000, details = x$details, asfx = NULL,

inus.only = x$control$inus.only, inus.def = x$control$inus.def,

minimalizeCsf = inus.only,

acyclic.only = x$acyclic.only, cycle.type = x$cycle.type, verbose = FALSE)

Arguments

x |

Object of class “cna”. |

details |

A character vector specifying the evaluation measures and additional solution attributes to be computed. Possible elements are all the measures in |

cases |

Logical; if |

n.init |

Integer capping the amount of initial asf combinations. Default at 1000. Serves to control the computational complexity of the csf building process. |

asfx |

Object of class “condTbl” produced by the |

inus.only |

Logical; if |

inus.def |

Character string specifying the definition of partial structural redundancy to be applied. Possible values are "implication" or "equivalence". The strings can be abbreviated. Cf. also |

minimalizeCsf |

Logical; if |

acyclic.only |

Logical; if |

cycle.type |

Character string specifying what type of cycles to be detected: |

verbose |

Logical; if |

Details

Depending on the processed data, the solutions (models) output by cna are often ambiguous, to the effect that many atomic and complex solutions fit the data equally well. To facilitate the inspection of the cna output, however, cna standardly returns only 5 minimally sufficient conditions (msc) and 5 atomic solution formulas (asf) for each outcome as well as 5 complex solution formulas (csf). msc can be used to extract all msc from an object x of class “cna”, asf to extract all asf, and csf to build approximately n.init csf from the asf stored in x. All solution attributes (details) that are saved in x are recovered as well. Moreover, all evaluation measures and solution attributes available in showMeasures—irrespective of whether they are saved in x—can be computed by specifying them in the details argument.

The outputs of msc, asf, and csf can be further processed by the condition function.

While msc and asf merely extract information stored in x, csf builds csf from the inventory of asf recovered at the end of the third stage of the cna algorithm (cf. vignette("cna"), section 4). That is, the csf function implements the fourth stage of that algorithm. It proceeds in a stepwise manner as follows.

-

n.initpossible conjunctions featuring one asf of every outcome are built. If

inus.only = TRUEorminimalizeCsf = TRUE, the solutions resulting from step 1 are freed of structural redundancies (cf. Baumgartner and Falk 2023).If

inus.only = TRUE, tautologous and contradictory solutions as well as solutions with partial structural redundancies (as defined ininus.def) and constant factors are eliminated. [Ifinus.only = FALSEandminimalizeCsf = TRUE, only structural redundancies are eliminated, meaning only step 2, but not step 3, is executed.]If

acyclic.only = TRUE, solutions with cyclic substructures are eliminated.Solutions that are a submodel of another solution are removed.

For those solutions that were modified in the previous steps, the scores on the selected evaluation

measuresare re-calculated and solutions that no longer reachconorcovare eliminated (cf.cna).The remaining solutions are returned as csf, ordered by complexity and the product of their scores on the evaluation

measures.

Value

msc, asf and csf return objects of class “condTbl”, an object similar to a data.frame, which features the following components:

outcome: | the outcomes |

condition: | the relevant conditions or solutions |

con: | the scores on the sufficiency measure (e.g. consistency) |

cov: | the scores on the necessity measure (e.g. coverage) |

complexity: | the complexity scores (number of factor value appearances to the left of “<->”) |

...: | scores on additional evaluation measures and solution attributes as specified in |

details

|

References

Lam, Wai Fung, and Elinor Ostrom. 2010. “Analyzing the Dynamic Complexity of Development Interventions: Lessons from an Irrigation Experiment in Nepal.” Policy Sciences 43 (2):1-25.

See Also

cna, configTable, condition, condTbl, cnaControl, is.inus, detailMeasures, showMeasures, cyclic, d.irrigate

Examples

# Crisp-set data from Lam and Ostrom (2010) on the impact of development interventions

# ------------------------------------------------------------------------------------

# CNA with causal ordering that corresponds to the ordering in Lam & Ostrom (2010); coverage

# cut-off at 0.9 (consistency cut-off at 1).

cna.irrigate <- cna(d.irrigate, ordering = "A, R, F, L, C < W", cov = .9,

maxstep = c(4, 4, 12), details = TRUE)

cna.irrigate

# The previous function call yields a total of 12 complex solution formulas, only

# 5 of which are returned in the default output.

# Here is how to extract all 12 complex solution formulas along with all

# solution attributes.

csf(cna.irrigate)

# With only the used evaluation measures and complexity plus exhaustiveness and faithfulness.

csf(cna.irrigate, details = c("e", "f"))

# Calculate additional evaluation measures from showCovCovMeasures().

csf(cna.irrigate, details = c("e", "f", "PAcon", "PACcov", "AACcon", "AAcov"))

# Extract all atomic solution formulas.

asf(cna.irrigate, details = c("e", "f"))

# Extract all minimally sufficient conditions.

msc(cna.irrigate) # capped at 20 rows

print(msc(cna.irrigate), n = Inf) # prints all rows

# Add cases featuring the minimally sufficient conditions combined

# with the outcome.

(msc.table <- msc(cna.irrigate, cases = TRUE))

# Render as data frame.

as.data.frame(msc.table)

# Extract only the conditions (solutions).

csf(cna.irrigate)$condition

asf(cna.irrigate)$condition

msc(cna.irrigate)$condition

# A CNA of d.irrigate without outcome specification and ordering is even more

# ambiguous.

cna2.irrigate <- cna(d.irrigate, cov = .9, maxstep = c(4,4,12),

details = c("e", "f", "ccon", "ccov"))

# Reduce the initial asf combinations to 50.

csf(cna2.irrigate, n.init = 50)

# Print the first 20 csf.

csf(cna2.irrigate, n.init = 50)[1:20, ]

# Print details about the csf building process.

csf(cna.irrigate, verbose = TRUE)

# Return evaluation measures and solution attributes with 5 digits.

print(asf(cna2.irrigate), digits = 5)

# Further examples

# ----------------

# An example generating structural redundancies.

target <- "(A*B + C <-> D)*(c + a <-> E)"

dat1 <- selectCases(target)

ana1 <- cna(dat1, maxstep = c(3, 4, 10))

# Run csf with elimination of structural redundancies.

csf(ana1, verbose = TRUE)

# Run csf without elimination of structural redundancies.

csf(ana1, verbose = TRUE, inus.only = FALSE)

# An example generating partial structural redundancies.

dat2 <- data.frame(

A=c(0,0,0,0,1,1,1,1,0,0,0,0,1,1,1,1,1,1,1,1,1,1,1,1,1,0, 1),

B=c(0,0,1,1,0,0,0,0,0,0,1,0,0,0,0,0,0,0,0,0,0,0,0,0,0,1,1),

C=c(1,1,0,0,0,1,0,0,1,1,0,1,1,0,1,1,0,1,1,1,0,1,0,1,0,1,0),

D=c(0,1,1,1,0,1,1,1,0,0,0,1,0,1,0,0,0,1,0,0,0,1,1,0,0,1,0),

E=c(1,0,0,0,0,1,1,1,1,1,1,0,0,1,0,0,0,1,1,1,1,0,0,0,0,1,1),

F=c(1,1,1,1,1,0,0,0,0,0,0,0,0,1,1,1,1,0,0,0,0,0,0,0,0,0,0),

G=c(1,1,1,1,1,1,1,1,1,1,1,1,1,0,0,0,0,0,0,0,0,0,0,0,0,1,1))

ana2 <- cna(dat2, con = .8, cov = .8, maxstep = c(3, 3, 10))

# Run csf without elimination of partial structural redundancies.

csf(ana2, inus.only = FALSE, verbose = TRUE)

# Run csf with elimination of partial structural redundancies.

csf(ana2, verbose = TRUE)

# Prior to version 3.6.0, the "equivalence" definition of partial structural

# redundancy was used by default (see ?is.inus() for details). Now, the

# "implication" definition is used. To replicate old behavior

# set inus.def to "equivalence".

csf(ana2, verbose = TRUE, inus.def = "equivalence")

# The two definitions only come apart in case of cyclic structures.

# Build only acyclic models.

csf(ana2, verbose = TRUE, acyclic.only = TRUE)

# Add further details.

csf(ana2, verbose = TRUE, acyclic.only = TRUE, details = c("PAcon", "PACcov"))

Fine-tuning and modifying the CNA algorithm

Description

The cnaControl function provides a number of arguments for fine-tuning and modifying the CNA algorithm as implemented in the cna function. The arguments can also be passed directly to the cna function. All arguments in cnaControl have default values that should be left unchanged for most CNA applications.

Usage

cnaControl(inus.only = TRUE, inus.def = c("implication","equivalence"),

type = "auto", con.msc = NULL,

rm.const.factors = FALSE, rm.dup.factors = FALSE,

cutoff = 0.5, border = "up", asf.selection = c("cs", "fs", "none"),

only.minimal.msc = TRUE, only.minimal.asf = TRUE, maxSol = 1e+06)

Arguments

inus.only |

Logical; if |

inus.def |

Character string specifying the definition of partial structural redundancy to be applied. Possible values are "implication" or "equivalence". The strings can be abbreviated. |

type |

Character vector specifying the type of the data analyzed by |

con.msc |

Numeric scalar between 0 and 1 to set the minimum threshold every msc must satisfy on the sufficiency measure selected in |

rm.const.factors, rm.dup.factors |

Logical; if |

cutoff |

Minimum membership score required for a factor to count as instantiated in the data and to be integrated into the analysis. Value in the unit interval [0,1]. The default cutoff is 0.5. Only meaningful if the data is fuzzy-set ( |

border |

Character string specifying whether factors with membership scores equal to |

asf.selection |

Character string specifying how to select asf based on outcome variation in configurations incompatible with a model. |

only.minimal.msc |

Logical; if |

only.minimal.asf |

Logical; if |

maxSol |

Maximum number of asf calculated. The default value should normally not be changed by the user. |

Details

When the inus.only argument takes its default value TRUE, the cna function only returns solution formulas—asf and csf—that are freed of all types of redundancies: redundancies in sufficient and necessary conditions as well as structural and partial structural redundancies. Moreover, tautologous and contradictory solutions and solutions featuring constant factors are eliminated (cf. is.inus). In other words, at inus.only = TRUE, cna issues so-called MINUS-formulas only (cf. vignette("cna") for details). MINUS-formulas are causally interpretable. In some research contexts, however, solution formulas with redundancies might be of interest, for example, when the analyst is not searching for causal models but for models with maximal data fit. In such cases, the inus.only argument can be set to its non-default value FALSE.

The notion of a partial structural redundancy (PSR) can be defined in two different ways, which can be selected through the inus.def argument. If inus.def = "implication" (default), a solution formula is treated as containing a PSR iff it logically implies a proper submodel of itself. If inus.def = "equivalence", a PSR obtains iff the solution formula is logically equivalent with a proper submodel of itself. The character string passed to inus.def can be abbreviated. To reproduce results produced by versions of the cna package prior to 3.6.0, inus.def may have to be set to "equivalence", which was the default in earlier versions.

The argument type allows for manually specifying the type of data passed to the cna function. The argument has the default value "auto", inducing automatic detection of the data type. But the user can still manually set the data type. Data with factors taking values 1 or 0 only are called crisp-set, which can be indicated by type = "cs". If the data contain at least one factor that takes more than two values, e.g. {1,2,3}, the data count as multi-value: type = "mv". Data featuring at least one factor taking real values from the interval [0,1] count as fuzzy-set: type = "fs". (Note that mixing multi-value and fuzzy-set factors in one analysis is not supported). One context in which users may want to set the data type manually is when they are interested in receiving models for both the presence and the absence of a crisp-set outcome from just one call of the cna function. When analyzing cs data x, cna(x, ordering = "A", type = "mv") searches for models of A=1 and A=0 at the same time, whereas the default cna(x, ordering = "A") searches for models of A=1 only.

The cna function standardly takes one threshold con for the selected sufficiency measure, e.g. consistency, that is imposed on both minimally sufficient conditions (msc) and solution formulas, asf and csf. But the analyst may want to impose a different con threshold on msc than on asf and csf. This can be accomplished by setting the argument con.msc to a different value than con. In that case, cna first builds msc using con.msc and then combines these msc to asf and to csf using con (and cov). See Examples below for a concrete context, in which this might be useful.

rm.const.factors and rm.dup.factors are used to determine the handling of constant factors, i.e. factors with constant values in all cases (rows) in the data analyzed by cna, and of duplicated factors, i.e. factors with identical value distributions in all cases in the data. If the arguments are given the value TRUE, factors with constant values are removed and all but the first of a set of duplicated factors are removed. As of package version 3.5.4, the default is FALSE for both rm.const.factors and rm.dup.factors, which means that constant and duplicated factors are not removed. See configTable for more details.

cna only includes factor configurations in the analysis that are actually instantiated in the data. The argument cutoff determines the minimum membership score required for a factor or a combination of factors to count as instantiated. It takes values in the unit interval [0,1] with a default of 0.5. border specifies whether configurations with membership scores equal to cutoff are rounded up (border = "up"), which is the default, or rounded down (border = "down").

If the data analyzed by cna feature noise, it can happen that all variation of an outcome occurs in noisy configurations in the data. In such cases, there may be asf that meet chosen con and cov thresholds (lower than 1) such that the corresponding outcome only varies in configurations that are incompatible with the strict crisp-set or fuzzy-set necessity and sufficiency relations expressed by those very asf. In the default setting "cs" of the argument asf.selection, an asf is only returned if the outcome takes a value above and below the 0.5 anchor in the configurations compatible with the strict crisp-set necessity and sufficiency relations expressed by that asf. At asf.selection = "fs", an asf is only returned if the outcome takes different values in the configurations compatible with the strict fuzzy-set necessity and sufficiency relations expressed by that asf. At asf.selection = "none", asf are returned even if outcome variation only occurs in noisy configurations. (For more details, see Examples below.)

To recover certain target structures from noisy data, it may be useful to allow cna to also consider sufficient conditions for further analysis that are not minimal (i.e. redundancy-free). This can be accomplished by setting only.minimal.msc to its non-default value FALSE. A concrete example illustrating the utility of only.minimal.msc = FALSE is provided in the Examples section below. Similarly, to recover certain target structures from noisy data, cna may need to also consider necessary conditions for further analysis that are not minimal. This is accomplished by setting only.minimal.asf to FALSE, in which case all disjunctions of msc reaching the con and cov thresholds will be returned. (The ordinary user is advised not to change the default values of either argument.)

For details on the usage of cnaControl, see the example below.

Value

A list of parameter settings.

See Also

cna, is.inus, configTable, showConCovMeasures

Examples

# cnaControl() generates a list that can be passed to the control argument of cna().

cna(d.jobsecurity, outcome = "JSR", con = .85, cov = .85, maxstep = c(3,3,9),

control = cnaControl(inus.only = FALSE, only.minimal.msc = FALSE, con.msc = .78))

# The fine-tuning arguments can also be passed to cna() directly.

cna(d.jobsecurity, outcome = "JSR", con = .85, cov = .85, maxstep = c(3,3,9),

inus.only = FALSE, only.minimal.msc = FALSE, con.msc = .78)

# Changing the set-inclusion cutoff and border rounding.

cna(d.jobsecurity, outcome = "JSR", con = .85, cov = .85,

control = cnaControl(cutoff= 0.6, border = "down"))

# Modifying the handling of constant factors.

data <- subset(d.highdim, d.highdim$V4==1)

cna(data, outcome = "V11", con=0.75, cov=0.75, maxstep = c(2,3,9),

control = cnaControl(rm.const.factors = TRUE))

# Illustration of only.minimal.msc = FALSE

# ----------------------------------------

# Simulate noisy data on the causal structure "a*B*d + A*c*D <-> E"

set.seed(1324557857)

mydata <- allCombs(rep(2, 5)) - 1

dat1 <- makeFuzzy(mydata, fuzzvalues = seq(0, 0.5, 0.01))

dat1 <- ct2df(selectCases1("a*B*d + A*c*D <-> E", con = .8, cov = .8, dat1))

# In dat1, "a*B*d + A*c*D <-> E" has the following con and cov scores.

as.condTbl(condition("a*B*d + A*c*D <-> E", dat1))

# The standard algorithm of CNA will, however, not find this structure with

# con = cov = 0.8 because one of the disjuncts (a*B*d) does not meet the con

# threshold.

as.condTbl(condition(c("a*B*d <-> E", "A*c*D <-> E"), dat1))

cna(dat1, outcome = "E", con = .8, cov = .8)

# With the argument con.msc we can lower the con threshold for msc, but this does not

# recover "a*B*d + A*c*D <-> E" either.

cna2 <- cna(dat1, outcome = "E", con = .8, cov = .8, con.msc = .78)

cna2

msc(cna2)

# The reason is that "A*c -> E" and "c*D -> E" now also meet the con.msc threshold and,

# therefore, "A*c*D -> E" is not contained in the msc---because of violated minimality.

# In a situation like this, lifting the minimality requirement via

# only.minimal.msc = FALSE allows CNA to find the intended target.

cna(dat1, outcome = "E", con = .8, cov = .8, control = cnaControl(con.msc = .78,

only.minimal.msc = FALSE))

# Overriding automatic detection of the data type

# ------------------------------------------------

# The type argument allows for manually setting the data type.

# If "cs" data are treated as "mv" data, cna() automatically builds models for all values

# of outcome factors, i.e. both positive and negated outcomes.

cna(d.educate, control = cnaControl(type = "mv"))

# Treating "cs" data as "fs".

cna(d.women, type = "fs")

# Not all manual settings are admissible.

try(cna(d.autonomy, outcome = "AU", con = .8, cov = .8, type = "mv" ))

# Illustration of asf.selection

# -----------------------------

# Consider the following data set:

d1 <- data.frame(X1 = c(1, 0, 1),

X2 = c(0, 1, 0),

Y = c(1, 1, 0))

ct1 <- configTable(d1, frequency = c(10, 10, 1))

# Both of the following asf reach con=0.95 and cov=1.

condition(c("X1+X2<->Y", "x1+x2<->Y"), ct1)

# Up to version 3.4.0 of the cna package, these two asf were inferred from

# ct1 by cna(). But the outcome Y is constant in ct1, except for a variation in

# the third row, which is incompatible with X1+X2<->Y and x1+x2<->Y. Subject to

# both of these models, the third row of ct1 is a noisy configuration. Inferring

# difference-making models that are incapable of accounting for the only difference

# in the outcome in the data is inadequate. (Thanks to Luna De Souter for

# pointing out this problem.) Hence, as of version 3.5.0, asf whose outcome only

# varies in configurations incompatible with the strict crisp-set necessity

# or sufficiency relations expressed by those asf are not returned anymore.

cna(ct1, outcome = "Y", con = 0.9)

# The old behavior of cna() can be obtained by setting the argument asf.selection

# to its non-default value "none".

cna(ct1, outcome = "Y", con = 0.9, control = cnaControl(asf.selection = "none"))

# Analysis of fuzzy-set data from Aleman (2009).

cna(d.pacts, con = .9, cov = .85)

cna(d.pacts, con = .9, cov = .85, asf.selection = "none")

# In the default setting, cna() does not return any model for d.pacts because

# the outcome takes a value >0.5 in every single case, meaning it does not change

# between presence and absence. No difference-making model should be inferred from

# such data.

# The implications of asf.selection can also be traced by

# the verbose argument:

cna(d.pacts, con = .9, cov = .85, verbose = TRUE)

Calculate the coherence of complex solution formulas

Description

Calculates the coherence measure of complex solution formulas (csf).

Usage

coherence(x, ...)

## Default S3 method:

coherence(x, ct, type, ...)

Arguments

x |

Character vector specifying an asf or csf. |

ct |

Data frame or |

type |

Character vector specifying the type of |

... |

Arguments passed to methods. |

Details

Coherence is a measure for model fit that is custom-built for complex solution formulas (csf). It measures the degree to which the atomic solution formulas (asf) combined in a csf cohere, i.e. are instantiated together in x rather than independently of one another. More concretely, coherence is the ratio of the number of cases satisfying all asf contained in a csf to the number of cases satisfying at least one asf in the csf. For example, if the csf contains the three asf asf1, asf2, asf3, coherence amounts to | asf1 * asf2 * asf3 | / | asf1 + asf2 + asf3 |, where |...| expresses the cardinality of the set of cases in x instantiating the corresponding expression. For asf, coherence returns 1. For boolean conditions (see condition), the coherence measure is not defined and coherence hence returns NA. For multiple csf that do not have a factor in common, coherence returns the minimum of the separate coherence scores.

Value

Numeric vector of coherence values to which cond is appended as a "names" attribute. If cond is a csf "asf1*asf2*asf3" composed of asf that do not have a factor in common, the csf is rendered with commas in the "names" attribute: "asf1, asf2, asf3".

See Also

cna, condition, selectCases, configTable, allCombs, full.ct, condTbl

Examples

# Perfect coherence.

dat1 <- selectCases("(A*b <-> C)*(C + D <-> E)")

coherence("(A*b <-> C)*(C + D <-> E)", dat1)

csf(cna(dat1, details = "coherence"))

# Non-perfect coherence.

dat2 <- selectCases("(a*B <-> C)*(C + D <-> E)*(F*g <-> H)")

dat3 <- rbind(ct2df(dat2), c(0,1,0,1,1,1,0,1))

coherence("(a*B <-> C)*(C + D <-> E)*(F*g <-> H)", dat3)

csf(cna(dat3, con = .88, details = "coherence"))

Methods for class “condList”

Description

The output of the condition (aka condList) function is a nested list of class “condList” that contains one or several data frames. The utilities in condList-methods are suited for rendering or reshaping these objects in different ways.

Usage

## S3 method for class 'condList'

summary(object, n = 6, ...)

## S3 method for class 'condList'

as.data.frame(x, row.names = attr(x, "cases"), optional = TRUE, nobs = TRUE, ...)

group.by.outcome(object, cases = TRUE)

Arguments

object, x |

Object of class “condList” as output by the |

n |

Positive integer: the maximal number of conditions to be printed. |

... |

Not used. |

row.names, optional |

As in |

nobs |

Logical; if |

cases |

Logical; if |

Details

The summary method for class “condList” prints the output of condition in a condensed manner. It is identical to printing with print.table = FALSE (but with a different default of argument n), see print.condList.

The output of condition is a nested list of class “condList” that contains one or several data frames. The method as.data.frame is a variant of the base method as.data.frame. It offers a convenient way of combining the columns of the data frames in a condList into one regular data frame.

Columns appearing in several tables (typically the modeled outcomes) are included only once in the resulting data frame. The output of as.data.frame has syntactically invalid column names by default, including operators such as "->" or "+".

Setting optional = FALSE converts the column names into syntactically valid names (using make.names).

group.by.outcome takes a condList as input and combines the entries in that nested list into a data frame with a larger number of columns, combining all columns concerning the same outcome into the same data frame. The additional attributes (measures, info, etc.) are thereby removed.

See Also

condition, condList, as.data.frame, make.names

Examples

# Analysis of d.irrigate data with standard evaluation measures.

ana1 <- cna(d.irrigate, ordering = "A, R, L < F, C < W", con = .9)

(ana1.csf <- condition(csf(ana1)$condition, d.irrigate))

# Convert condList to data frame.

as.data.frame(ana1.csf)

as.data.frame(ana1.csf[1]) # Include the first condition only

as.data.frame(ana1.csf, row.names = NULL)

as.data.frame(ana1.csf, optional = FALSE)

as.data.frame(ana1.csf, nobs = FALSE)

# Summary.

summary(ana1.csf)

# Analyze atomic solution formulas.

(ana1.asf <- condition(asf(ana1)$condition, d.irrigate))

as.data.frame(ana1.asf)

summary(ana1.asf)

# Group by outcome.

group.by.outcome(ana1.asf)

# Analyze minimally sufficient conditions.

(ana1.msc <- condition(msc(ana1)$condition, d.irrigate))

as.data.frame(ana1.msc)

group.by.outcome(ana1.msc)

summary(ana1.msc)

# Print more than 6 conditions.

summary(ana1.msc, n = 10)

# Analysis with different evaluation measures.

ana2 <- cna(d.irrigate, ordering = "A, R, L < F, C < W", con = .9, cov = .9,

measures = c("PAcon", "PACcov"))

(ana2.csf <- condition(csf(ana2)$condition, d.irrigate))

print(ana2.csf, add.data = d.irrigate, n=10)

as.data.frame(ana2.csf, nobs = FALSE, row.names = NULL)

summary(ana2.csf, n = 10)

Create summary tables for conditions

Description

The function condTbl returns a table of class “condTbl”, which is a data.frame summarizing selected features of specified conditions (boolean, atomic, complex), e.g. scores on evaluation measures such as consistency and coverage. In contrast to a condList, a condTbl only shows summary measures and does not provide any information at the level of individual cases in the data.

The objects output by the functions msc, asf, and csf are such tables, as well as those returned by detailMeasures.

as.condTbl reshapes a condList as output by condition and condList to a condTbl.

condTbl(x, ...) executes condList(x, ...) and then turns its output into a condTbl by applying as.condTbl.

Usage

as.condTbl(x, ...)

condTbl(x, ...)

## S3 method for class 'condTbl'

print(x, n = 20, digits = 3, quote = FALSE, row.names = TRUE,

printMeasures = TRUE, ...)

## S3 method for class 'condTbl'

as.data.frame(x, ...)

Arguments

x |

In |

n |

Maximal number of rows of the |

digits |

Number of digits to print in evaluation measures and solution attributes (cf. |

quote, row.names |

As in |

printMeasures |

Logical; if |

... |

All arguments in |

Details

The function as.condTbl takes an object of class “condList” returned by the condition function as input and reshapes it in such a way as to make it identical to the output returned by msc, asf, and csf.

The function condTbl is identical with as.condTbl(condition(...)) and as.condTbl(condList(...)), respectively.

It thus takes any set of arguments that are valid in condition and condList and transforms the result into an object of class “condTbl”.

The argument digits applies to the print method. It determines how many digits of the evaluation measures and solution attributes (e.g. standard consistency and coverage, exhaustiveness, faithfulness, or coherence) are printed. The default value is 3.

Value

The functions as.condTbl and condTbl return an object of class “condTbl”, a concise summary table featuring a set of conditions (boolean, atomic, complex), their outcomes (if the condition is an atomic or complex solution formula), and their scores on given summary measures (e.g. consistency and coverage).

Technically, an object of class “condTbl” is a data.frame with an additional class attribute "condTbl". It prints slightly differently by default than a data.frame with respect to column alignment and number of digits.

The section “Value” in cna-solutions has an enumeration of the columns that are most commonly present in a condTbl.

See Also

cna, configTable, cna-solutions, condition, condList, detailMeasures

Examples

# Candidate asf for the d.jobsecurity data.

x <- "S*R + C*l + L*R + L*P <-> JSR"

# Create summary tables.

condTbl(x, d.jobsecurity)

# Using non-standard evaluation measures.

condTbl(x, d.jobsecurity, measures = c("PAcon", "PACcov"))

# Candidate csf for the d.jobsecurity data.

x <- "(C*R + C*V + L*R <-> P)*(P + S*R <-> JSR)"

# Create summary tables.

condTbl(x, d.jobsecurity)

# Non-standard evaluation measures.

condTbl(x, d.jobsecurity, measures = c("Ccon", "Ccov"))

# Boolean conditions.

cond <- c("-(P + S*R)", "C*R + !(C*V + L*R)", "-L+(S*P)")

condTbl(cond, d.jobsecurity) # only frequencies are returned

# Do not print measures.

condTbl(x, d.jobsecurity) |> print(printMeasures = FALSE)

# Print more digits.

condTbl(x, d.jobsecurity) |> print(digits = 10)

# Print more measures.

detailMeasures(x, d.jobsecurity,

what = c("Ccon", "Ccov", "PAcon", "PACcov"))

# Analyzing d.jobsecurity with standard evaluation measures.

ana1 <- cna(d.jobsecurity, con = .8, cov = .8, outcome = "JSR")

# Reshape the output of the condition function in such a way as to make it identical to the

# output returned by msc, asf, and csf.

head(as.condTbl(condition(msc(ana1), d.jobsecurity)), 3)

head(as.condTbl(condition(asf(ana1), d.jobsecurity)), 3)

head(as.condTbl(condition(csf(ana1), d.jobsecurity)), 3)

head(condTbl(csf(ana1), d.jobsecurity), 3) # Same as preceding line

Evaluate msc, asf, and csf on the level of cases/configurations in the data

Description

The condition function provides assistance to inspect the properties of msc, asf, and csf (as returned by cna) in a data frame or configTable, but also of any other Boolean expression. The function evaluates which configurations and cases instantiate a given msc, asf, or csf and lists the scores on selected evaluation measures (e.g. consistency and coverage).

As of version 4.0 of the cna package, the function condition has been renamed condList, such that the name of the function is now identical with the class of the resulting object. Since condition remains available as an alias of condList, backward compatibility of existing code is guaranteed.

Usage

condList(x, ct = full.ct(x), ..., verbose = TRUE)

condition(x, ct = full.ct(x), ..., verbose = TRUE)

## S3 method for class 'character'

condList(x, ct = full.ct(x),

measures = c("standard consistency", "standard coverage"),

type, add.data = FALSE,

force.bool = FALSE, rm.parentheses = FALSE, ...,

verbose = TRUE)

## S3 method for class 'condTbl'

condList(x, ct = full.ct(x),

measures = attr(x, "measures"), ...,

verbose = TRUE)

## S3 method for class 'condList'

print(x, n = 3, printMeasures = TRUE, ...)

## S3 method for class 'cond'

print(x, digits = 3, print.table = TRUE,

show.cases = NULL, add.data = NULL, ...)

Arguments

x |

Character vector specifying a Boolean expression such as |

ct |

Data frame or |

measures |

Character vector of length 2. |

verbose |

Logical; if |

type |

Character vector specifying the type of |

add.data |

Logical; if |

force.bool |

Logical; if |

rm.parentheses |

Logical; if |

n |

Positive integer determining the maximal number of evaluations to be printed. |

printMeasures |

Logical; if |

digits |

Number of digits to print in the scores on the chosen evaluation |

print.table |

Logical; if |

show.cases |

Logical; if |

... |

Arguments passed to methods. |

Details

Depending on the processed data, the solutions output by cna are often ambiguous; that is, many solution formulas may fit the data equally well. If that happens, the data alone are insufficient to single out one solution. While cna simply lists all data-fitting solutions, the condition (aka condList) function provides assistance in comparing different minimally sufficient conditions (msc), atomic solution formulas (asf), and complex solution formulas (csf) in order to have a better basis for selecting among them.

Most importantly, the output of condition shows in which configurations and cases in the data an msc, asf, and csf is instantiated and not instantiated. Thus, if the user has prior causal knowledge about particular configurations or cases, the information received from condition may help identify the solutions that are consistent with that knowledge. Moreover, condition indicates which configurations and cases are covered by the different cna solutions and which are not, and the function returns the scores on selected evaluation measures for each solution.

The condition function is independent of cna. That is, any msc, asf, or csf—irrespective of whether they are output by cna—can be given as input to condition. Even Boolean expressions that do not have the syntax of CNA solution formulas can be passed to condition.

The first required input x is either an object of class “condTbl” as produced by condTbl and the functions in

cna-solutions or a character vector consisting of Boolean formulas composed of factor values that appear in data ct. ct is the second required input; it can be a configTable or a data frame. If ct is a data frame and the type argument has its default value "auto", condition first determines the data type and then converts the data frame into a configTable. The data type can also be manually specified by giving the type argument one of the values "cs", "mv", or "fs".

The measures argument is the same as in cna. Its purpose is to select the measures for evaluating whether the evidence in the data ct warrants an inference to sufficiency and necessity. It expects a character vector of length 2. The first element, measures[1], specifies the measure to be used for sufficiency evaluation, and measures[2] specifies the measure to be used for necessity evaluation. The available evaluation measures can be printed to the console through showConCovMeasures. The default measures are standard consistency and coverage. For more, see the cna package vignette (vignette("cna")), section 3.2.

The operation of conjunction can be expressed by “*” or “&”, disjunction by “+” or “|”, negation can be expressed by “-” or “!” or, in case of crisp-set or fuzzy-set data, by changing upper case into lower case letters and vice versa, implication by “->”, and equivalence by “<->”. Examples are

-

A*b -> C, A+b*c+!(C+D), A*B*C + -(E*!B), C -> A*B + a*b -

(A=2*B=4 + A=3*B=1 <-> C=2)*(C=2*D=3 + C=1*D=4 <-> E=3) -

(A=2*B=4*!(A=3*B=1)) | !(C=2|D=4)*(C=2*D=3 + C=1*D=4 <-> E=3)

Three types of conditions are distinguished:

The type boolean comprises Boolean expressions that do not have the syntactic form of CNA solution formulas, meaning the character strings in

xdo not have an “->” or “<->” as main operator. Examples:"A*B + C"or"-(A*B + -(C+d))". The expression is evaluated and written into a data frame with one column. Frequency is attached to this data frame as an attribute.The type atomic comprises expressions that have the syntactic form of atomic solution formulas (asf), meaning the corresponding character strings in the argument

xhave an “->” or “<->” as main operator. Examples:"A*B + C -> D"or"A*B + C <-> D". The expressions on both sides of “->” and “<->” are evaluated and written into a data frame with two columns. Scores on the selected evaluationmeasuresare attached to these data frames as attributes.The type complex represents complex solution formulas (csf). Example:

"(A*B + a*b <-> C)*(C*d + c*D <-> E)". Each component must be a solution formula of type atomic. These components are evaluated separately and the results stored in a list. Scores on the selected evaluationmeasuresare attached to this list.

The types of the character strings in the input x are automatically discerned and thus do not need to be specified by the user.